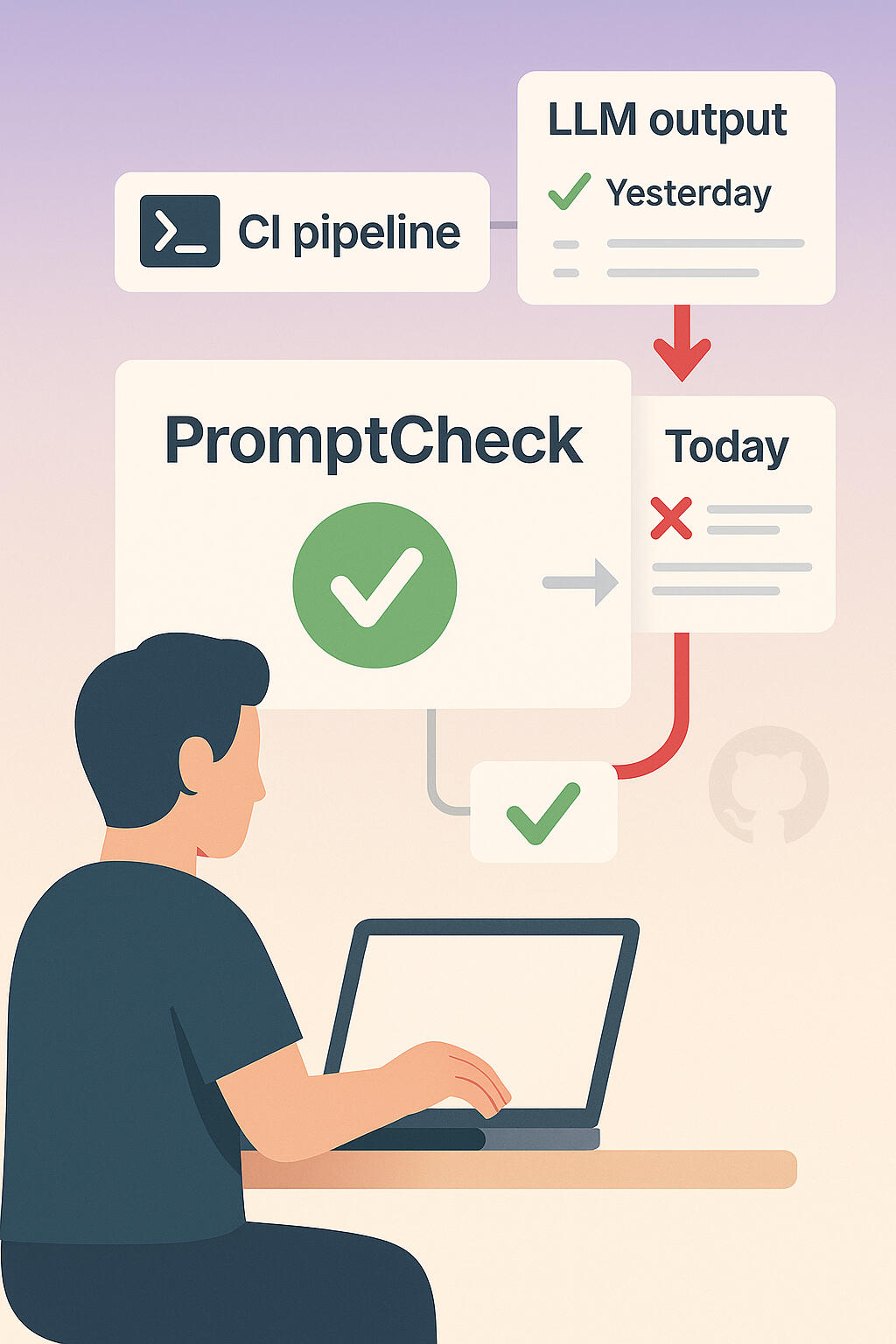

PromptCheck ✅

CI-first Prompt Testing for LLMs (OpenAI, Claude, GPT-4)

PromptCheck runs automated tests on your prompts in CI — so you catch failures before they reach production.

Stop Prompt Failures from Reaching Production

LLM outputs can change unexpectedly – what worked yesterday might fail today. PromptCheck brings automated prompt testing into your CI pipeline, so you catch these regressions before they affect users. Think of it as unit tests for your AI prompts, running on every pull request.

How It Works (3 Steps)

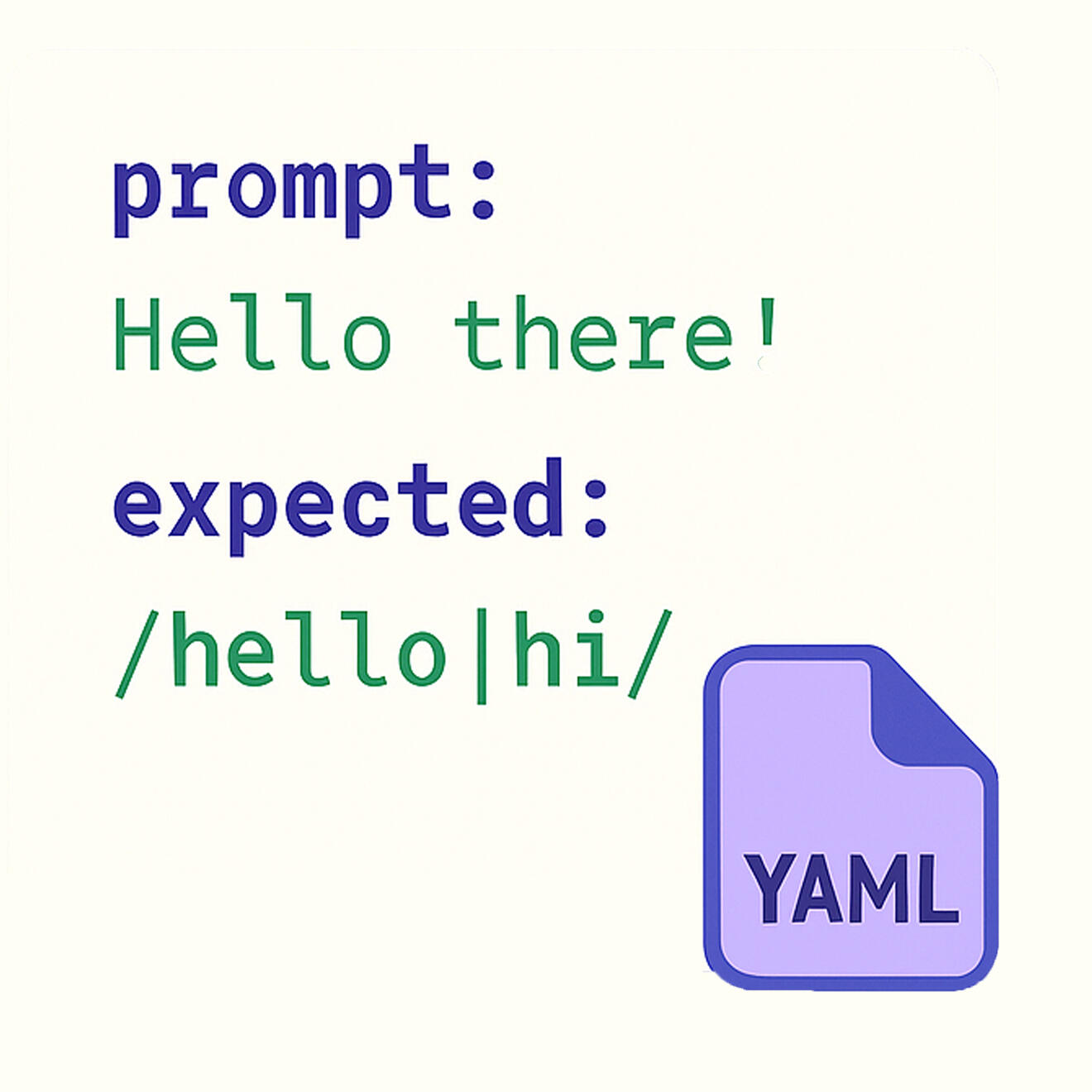

Write Prompt Tests in YAML

Describe expected outputs with regex or text match.
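As a rough illustration of the two match types, the sketch below shows what a regex check and an exact-match check amount to in plain Python. The function names and the test-case fields (`name`, `type`, `expected`) are hypothetical and are not taken from PromptCheck's actual YAML schema or internals.

```python
import re


def exact_match(output: str, expected: str) -> bool:
    """Pass only if the output equals the expected text, ignoring surrounding whitespace."""
    return output.strip() == expected.strip()


def regex_match(output: str, pattern: str) -> bool:
    """Pass if the pattern is found anywhere in the output."""
    return re.search(pattern, output) is not None


def check(case: dict, output: str) -> bool:
    """Dispatch on the (hypothetical) `type` field of a test case."""
    if case["type"] == "regex":
        return regex_match(output, case["expected"])
    if case["type"] == "exact":
        return exact_match(output, case["expected"])
    raise ValueError(f"unknown check type: {case['type']}")


# Hypothetical test case, mirroring what a YAML entry might describe.
case = {"name": "greeting", "type": "regex", "expected": r"(?i)\bhello\b"}
```

A regex check tolerates harmless variation in wording, while an exact match is the stricter choice for short, fixed outputs such as labels or yes/no answers.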

Run Automatically in GitHub CI

The evaluation runs on every pull request. If outputs change unexpectedly, the check fails and you see a ❌ on the PR.
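The ❌ relies on a standard CI convention: a step that exits with a nonzero status fails the job. The sketch below shows that idea only; `summarize` and the result format are hypothetical and do not describe PromptCheck's actual runner.

```python
def summarize(results: dict[str, bool]) -> tuple[int, str]:
    """Return (exit_code, report) for a mapping of test name -> passed."""
    lines = [f"{'✅' if ok else '❌'} {name}" for name, ok in results.items()]
    failed = sum(1 for ok in results.values() if not ok)
    lines.append(f"{len(results) - failed} passed, {failed} failed")
    # Nonzero exit code signals failure to the CI system.
    return (1 if failed else 0), "\n".join(lines)


# Hypothetical results for two prompt tests.
code, report = summarize({"greeting": True, "refund_policy": False})
print(report)
# A CI script would finish with sys.exit(code) so the PR check reflects the result.
```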

Fix Prompt. Merge with Confidence.

You keep LLM behavior stable — even if models change.

Features Snapshot

| Feature | Free Beta | PromptCheck Pro |
|---|---|---|
| ✅ LLM prompt regression tests | Yes | Yes |
| ⚙️ GitHub Action integration | Yes | Yes |
| 🔍 Regex and exact-match metrics | Yes | Yes |
| 📄 YAML config + CLI runner | Yes | Yes |
| 📬 PR comment summary | Yes | Yes |
| 🔐 Pro features: Slack alerts, dashboards, usage tracking | No | Coming soon |